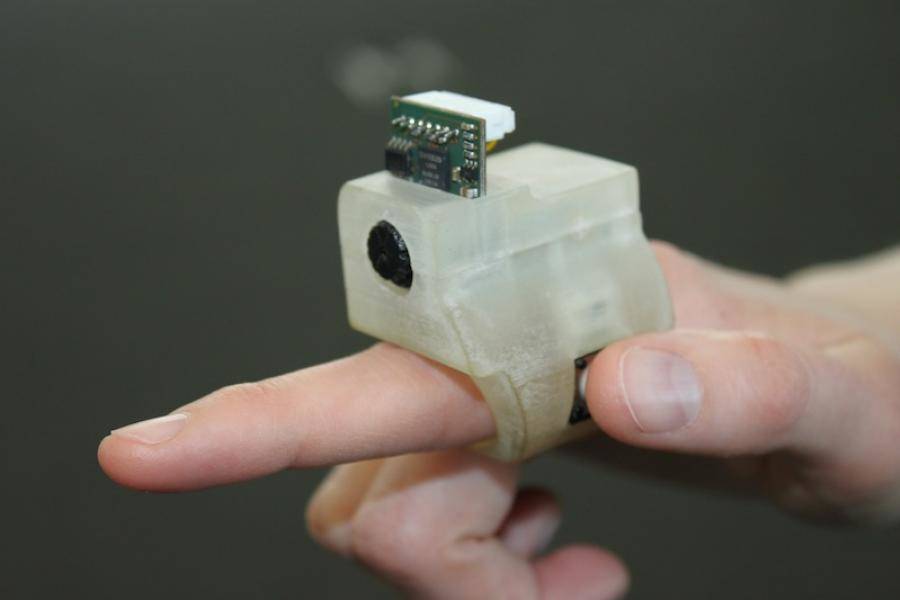

EyeRing is a wearable interface that lets users point at or touch objects to access digital information about them and about the world. The idea of a micro camera worn as a ring on the index finger began as an experimental assistive technology for visually impaired people; however, the team soon realized its potential for assistive interaction across the usability spectrum, including children and sighted adults. With a button on the side that can be pushed with the thumb, the ring takes a picture or a video that is sent wirelessly to a mobile phone.

A computing element, embodied as a mobile phone, is in turn paired with an earpiece that plays the information back to the user. The finger-worn device is autonomous and wireless, and a single button initiates the interaction. Information transferred to the phone is processed there, and the results are transmitted to the headset for the user to hear.

Several videos about EyeRing have been made. One shows a visually impaired man making his way through a clothing store, touching T-shirts on a rack as he tries to find his preferred color and size and to learn the price. He points his EyeRing finger at a shirt to hear that it is gray, and then points at the price tag to find out how much the shirt costs.

The researchers note that a user needs to pair the finger-worn device with the mobile phone application only once. After that, a Bluetooth connection is established automatically whenever both are running.
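The "pair once, reconnect automatically" behavior can be sketched as remembering the ring's address after the first pairing and trying it on every app start. This is a minimal illustration, not the EyeRing app's code: the settings key, the helper names, and the `connect` callback are hypothetical, and a plain dict stands in for the app's persistent settings (on Android, something like `SharedPreferences`).

```python
from typing import Callable, MutableMapping, Optional

PAIRED_KEY = "paired_ring_address"  # hypothetical settings key


def remember_pairing(settings: MutableMapping[str, str], address: str) -> None:
    """Run once, after the user completes the initial pairing."""
    settings[PAIRED_KEY] = address


def on_app_start(settings: MutableMapping[str, str],
                 connect: Callable[[str], bool]) -> str:
    """Reconnect automatically to a previously paired ring, if there is one."""
    address: Optional[str] = settings.get(PAIRED_KEY)
    if address is None:
        return "needs_pairing"  # first run: ask the user to pair
    # connect() is a hypothetical stand-in for the real Bluetooth connection
    # attempt; it returns False when the ring is off or out of range.
    return "connected" if connect(address) else "ring_unavailable"
```

On the first run `on_app_start` reports that pairing is needed; once `remember_pairing` has stored an address, later starts go straight to a connection attempt without user involvement.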

The Android application on the mobile phone analyzes the image using the team's computer vision engine. The type of analysis and response depends on the preset mode, for example color, distance, or currency. After analyzing the image data, the Android application uses a text-to-speech module to read the information out through a headset, according to the researchers.
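To make the mode-dependent analysis concrete, here is a minimal sketch of what a "color" mode could do: average the pixels in a patch of the snapshot, pick the nearest entry from a small named palette, and produce the sentence handed to text-to-speech. The palette, the RGB-distance rule, and the function names are assumptions for illustration; the article does not describe how EyeRing's vision engine actually names colors, and the distance and currency modes are not sketched.

```python
from statistics import mean
from typing import Dict, List, Tuple

RGB = Tuple[int, int, int]

# Hypothetical palette; a real system would use a larger, perceptually tuned set.
PALETTE: Dict[str, RGB] = {
    "black": (0, 0, 0),
    "white": (255, 255, 255),
    "gray": (128, 128, 128),
    "red": (200, 30, 30),
    "green": (30, 160, 60),
    "blue": (30, 60, 200),
    "yellow": (230, 210, 40),
}


def average_color(pixels: List[RGB]) -> RGB:
    """Mean RGB over the pixels (e.g. a patch at the center of the snapshot)."""
    r = round(mean(p[0] for p in pixels))
    g = round(mean(p[1] for p in pixels))
    b = round(mean(p[2] for p in pixels))
    return (r, g, b)


def nearest_color_name(rgb: RGB) -> str:
    """Squared Euclidean distance in RGB space: simple, not perceptually uniform."""
    return min(PALETTE,
               key=lambda name: sum((a - c) ** 2 for a, c in zip(rgb, PALETTE[name])))


def describe(mode: str, pixels: List[RGB]) -> str:
    """Turn the analysis result into the sentence passed to text-to-speech."""
    if mode == "color":
        return f"The color is {nearest_color_name(average_color(pixels))}."
    raise NotImplementedError(f"mode {mode!r} is not sketched here")
```

For instance, a patch of grayish pixels such as `[(120, 124, 130), (136, 132, 126)]` averages to `(128, 128, 128)` and is described as gray. Plain RGB distance was chosen only for brevity; a perceptual color space would name colors more reliably.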

The MIT group behind EyeRing consists of Suranga Nanayakkara, visiting faculty in the Fluid Interfaces group at the MIT Media Lab and also a professor at the Singapore University of Technology and Design; Roy Shilkrot, a first-year doctoral student in the group; and Patricia Maes, associate professor and founder of the Media Lab's Fluid Interfaces group.

EyeRing is promising as a concept, but the team expects the prototype to evolve through more iterations. The creators describe the work as still very much in progress: they want to show that it is a viable solution while continuing to improve it. The current implementation uses a TTL serial JPEG camera, a 16 MHz AVR processor, a Bluetooth module, a 3.7 V lithium-ion polymer battery, a 3.3 V regulator, and a push-button switch. The team also looks forward to a device with more advanced capabilities, such as a real-time video feed from the camera, higher computational power, and additional sensors like gyroscopes and a microphone. These capabilities are in development for the next prototype of EyeRing.
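With a serial JPEG camera and a Bluetooth module, the ring ultimately has to push a variable-length JPEG over a byte stream, so the phone needs some way to know where one snapshot ends. The sketch below shows one common approach, a length-prefixed frame with a CRC-32 checksum, sent in small chunks. The framing format, the magic marker, and the 64-byte chunk size are all assumptions for illustration; the article does not specify EyeRing's transfer protocol.

```python
import struct
import zlib
from typing import Iterator, List

CHUNK_SIZE = 64                  # hypothetical; small writes suit a tiny MCU buffer
HEADER = struct.Struct(">4sII")  # magic, payload length, CRC-32 (big-endian)
MAGIC = b"EYER"                  # hypothetical start-of-frame marker


def frame_snapshot(jpeg: bytes) -> bytes:
    """Prefix the JPEG with a header so the phone knows its length and checksum."""
    return HEADER.pack(MAGIC, len(jpeg), zlib.crc32(jpeg)) + jpeg


def chunks(frame: bytes, size: int = CHUNK_SIZE) -> Iterator[bytes]:
    """Split the frame into the small writes the ring would push over Bluetooth."""
    for i in range(0, len(frame), size):
        yield frame[i:i + size]


def reassemble(received: List[bytes]) -> bytes:
    """Phone side: join the chunks, validate the header, and return the JPEG."""
    data = b"".join(received)
    magic, length, crc = HEADER.unpack_from(data)
    payload = data[HEADER.size:HEADER.size + length]
    if magic != MAGIC or len(payload) != length or zlib.crc32(payload) != crc:
        raise ValueError("corrupted or incomplete snapshot")
    return payload
```

A fixed-size header keeps the microcontroller side trivial (it only needs the image length, which a serial camera typically reports before the image data), while the checksum lets the phone discard a snapshot damaged in transit instead of feeding it to the vision engine.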

A Finger-worn Assistant

The desire to replace an impaired human visual sense or augment a healthy one had a strong influence on the design and rationale behind EyeRing. To that end, we propose a system composed of a finger-worn device with an embedded camera, a computing element embodied as a mobile phone, and an earpiece for audio feedback. The finger-worn device is autonomous and wireless, and includes a single button to initiate the interaction. Information from the device is transferred to the computing element, where it is processed, and the results are transmitted to the headset for the user to hear.

Typically, a user single-clicks the push-button switch on the side of the ring with the thumb. At that moment, a snapshot is taken from the camera and the image is transferred via Bluetooth to the mobile phone. An Android application on the mobile phone then analyzes the image using our computer vision engine. After analyzing the image data, the Android application uses a text-to-speech module to read the information out through a hands-free headset. Users can change the preset mode by double-clicking the push-button and giving the system a brief verbal command, such as distance, color, or currency.
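The single-click/double-click interaction described in this paragraph can be sketched as two pieces: grouping button presses into clicks by timing, and a small controller that either triggers a snapshot in the current mode or listens for a spoken mode name. The 0.4-second window, the class and method names, and the log strings are hypothetical; speech recognition and the actual capture and transfer are reduced to method calls and log entries.

```python
from dataclasses import dataclass, field
from typing import List

DOUBLE_CLICK_WINDOW_S = 0.4  # hypothetical timing threshold
MODES = {"color", "distance", "currency"}


def classify_clicks(press_times: List[float],
                    window: float = DOUBLE_CLICK_WINDOW_S) -> List[str]:
    """Group button-press timestamps (seconds) into 'single'/'double' clicks.

    Works on a complete list; a live version would wait until the window
    expires before reporting a single click.
    """
    events: List[str] = []
    i = 0
    while i < len(press_times):
        if i + 1 < len(press_times) and press_times[i + 1] - press_times[i] <= window:
            events.append("double")
            i += 2
        else:
            events.append("single")
            i += 1
    return events


@dataclass
class RingController:
    mode: str = "color"
    awaiting_command: bool = False
    log: List[str] = field(default_factory=list)

    def on_click(self, kind: str) -> None:
        if kind == "double":
            # Double click: listen for a brief spoken mode name.
            self.awaiting_command = True
            self.log.append("listening for mode")
        else:
            # Single click: snapshot -> Bluetooth -> analysis in the current mode.
            self.log.append(f"snapshot analyzed in {self.mode} mode")

    def on_voice_command(self, word: str) -> None:
        if not self.awaiting_command:
            return  # ignore speech unless a double click asked for it
        self.awaiting_command = False
        word = word.strip().lower()
        if word in MODES:
            self.mode = word
            self.log.append(f"mode set to {word}")
        else:
            self.log.append(f"unknown mode, keeping {self.mode}")
```

For example, presses at 0.0 s, 0.2 s, and 1.5 s classify as one double click followed by one single click; after a double click and the spoken word "currency", the next single click is analyzed in currency mode.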