Smart Home Technology

Smart home technology lets homeowners monitor their houses remotely, countering dangers such as a coffee maker left on or a front door left unlocked.

Smart homes are also beneficial for the elderly, providing monitoring that can help seniors to remain at home comfortably and safely, rather than moving to a nursing home or requiring 24/7 home care.

Unsurprisingly, smart homes can accommodate user preferences. For example, as soon as you arrive home, your garage door will open, the lights will go on, the fireplace will roar and your favorite tunes will start playing on your smart speakers.

Home automation also helps consumers improve efficiency. Instead of leaving the air conditioning on all day, a smart home system can learn your behaviors and make sure the house is cooled down by the time you arrive home from work. The same goes for appliances. And with a smart irrigation system, your lawn will only be watered when needed and with the exact amount of water necessary. With home automation, energy, water and other resources are used more efficiently, which helps save both natural resources and money for the consumer.

However, home automation systems have struggled to become mainstream, in part due to their technical nature. A drawback of smart homes is their perceived complexity; some people have difficulty with technology or will give up on it at the first annoyance. Smart home manufacturers and alliances are working to reduce complexity and improve the user experience so that it is enjoyable and beneficial for users of all types and technical levels.

For home automation systems to be truly effective, devices must be interoperable regardless of who manufactured them, using the same protocol or, at least, complementary ones. Because the market is still so young, there is no gold standard for home automation yet. However, standards alliances are partnering with manufacturers and protocol developers to ensure interoperability and a seamless user experience.

“Intelligence is the ability to adapt to change.”

–Stephen Hawking

How smart homes work/smart home implementation

Newly built homes are often constructed with smart home infrastructure in place. Older homes, on the other hand, can be retrofitted with smart technologies. While many smart home systems still run on X10 or Insteon, Bluetooth and Wi-Fi have grown in popularity.

Zigbee and Z-Wave are two of the most common home automation communications protocols in use today. Both are mesh network technologies that use short-range, low-power radio signals to connect smart home systems. Though both target the same smart home applications, Z-Wave has a range of about 30 meters compared with Zigbee’s 10 meters, and Zigbee is often perceived as the more complex of the two. Zigbee chips are available from multiple companies, while Z-Wave chips have traditionally come from a single source, Sigma Designs, whose Z-Wave business Silicon Labs acquired in 2018.
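To make the mesh idea concrete, here is a minimal Python sketch of how a message might hop from device to device when the destination is out of direct radio range. It is a toy model and not how Zigbee or Z-Wave actually route traffic: the device names, the coordinates and the `mesh_route` function are all assumptions made up for illustration, and the 10-meter and 30-meter ranges are simply the figures quoted above.

```python
from collections import deque
from math import dist


def mesh_route(nodes, source, target, radio_range):
    """Return the path with the fewest hops from source to target, or None.

    nodes maps a device name to an (x, y) position in meters (hypothetical
    layout). Two devices can talk directly only if they are within
    radio_range of each other. Breadth-first search is used purely for
    illustration; real protocols use their own routing schemes.
    """
    queue = deque([[source]])
    visited = {source}
    while queue:
        path = queue.popleft()
        current = path[-1]
        if current == target:
            return path
        for name, position in nodes.items():
            in_range = dist(nodes[current], position) <= radio_range
            if name not in visited and in_range:
                visited.add(name)
                queue.append(path + [name])
    return None  # no chain of devices connects source to target


# Hypothetical devices spaced 8 meters apart along a hallway.
example_nodes = {
    "hub": (0, 0),
    "plug": (8, 0),
    "bulb": (16, 0),
    "lock": (24, 0),
}

if __name__ == "__main__":
    # With a 10 m radio the hub must relay through the plug and the bulb.
    print(mesh_route(example_nodes, "hub", "lock", 10))
    # With a 30 m radio the hub can reach the lock directly.
    print(mesh_route(example_nodes, "hub", "lock", 30))
```

The point of the sketch is that in a mesh, every powered device can extend the network's reach, so a shorter radio range does not necessarily mean a smaller home can be covered.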

A smart home is not a collection of disparate smart devices and appliances, but a set of devices that work together to form a remotely controllable network. All devices are controlled by a master home automation controller, often called a smart home hub. The smart home hub is a hardware device that acts as the central point of the smart home system and is able to sense, process data and communicate wirelessly. It combines all of the disparate apps into a single smart home app that homeowners can control remotely. Examples of smart home hubs include Amazon Echo, Google Home, Insteon Hub Pro, Samsung SmartThings and Wink Hub, among others.

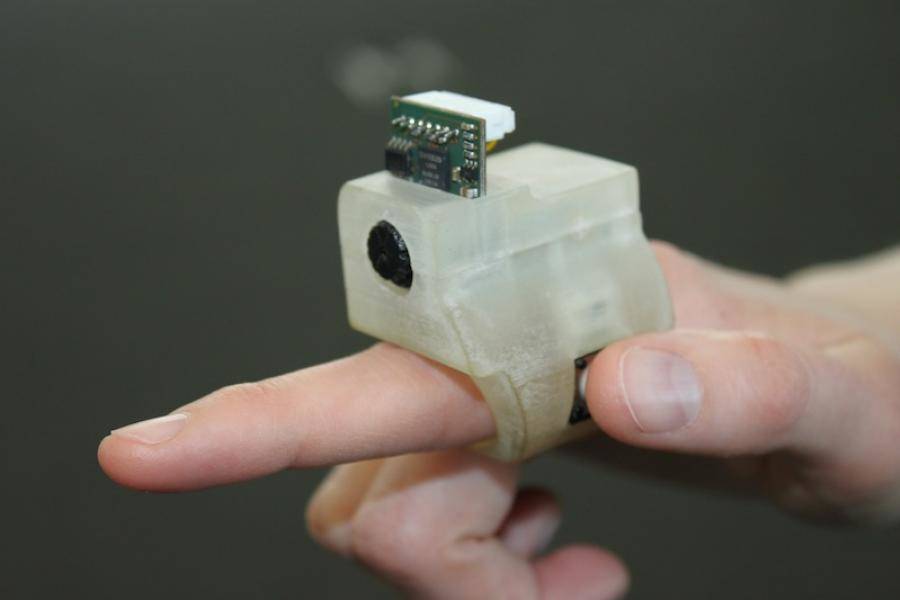

Some smart home systems can be created from scratch, for example, using a Raspberry Pi or other prototyping board. Others can be purchased as a bundled smart home kit, also known as a smart home platform, that contains the pieces needed to start a home automation project.

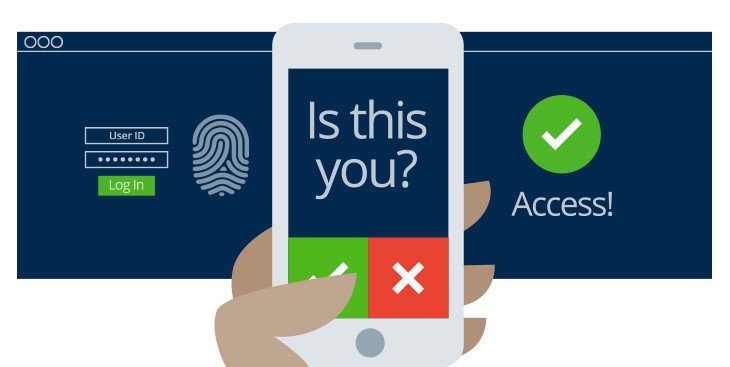

In simple smart home scenarios, events can be timed or triggered. Timed events are based on a clock, for example, lowering the blinds at 6:00 p.m., while triggered events depend on actions in the automated system; for example, when the owner’s smartphone approaches the door, the smart lock unlocks and the smart lights go on.
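The difference between timed and triggered events can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `RuleEngine` class, its method names and the action strings such as `"lower_blinds"` are hypothetical and do not correspond to any particular smart home product.

```python
from datetime import time
from typing import Dict, List


class RuleEngine:
    """Toy rule engine: maps clock times and named events to actions."""

    def __init__(self) -> None:
        self.timed: Dict[time, List[str]] = {}
        self.triggered: Dict[str, List[str]] = {}

    def add_timed(self, at: time, action: str) -> None:
        # Timed rule: run the action when the clock reaches `at`.
        self.timed.setdefault(at, []).append(action)

    def add_triggered(self, event: str, actions: List[str]) -> None:
        # Triggered rule: run the actions when `event` is reported.
        self.triggered.setdefault(event, []).extend(actions)

    def on_clock(self, now: time) -> List[str]:
        # Return the actions scheduled for exactly this time of day.
        return list(self.timed.get(now, []))

    def on_event(self, event: str) -> List[str]:
        # Return the actions attached to this event, if any.
        return list(self.triggered.get(event, []))


rules = RuleEngine()
rules.add_timed(time(18, 0), "lower_blinds")
rules.add_triggered("phone_near_door", ["unlock_door", "lights_on"])

if __name__ == "__main__":
    print(rules.on_clock(time(18, 0)))        # actions due at 6:00 p.m.
    print(rules.on_event("phone_near_door"))  # actions for the owner's arrival
```

A real system would also need to decide how precisely a clock tick has to match a rule and how to confirm that the approaching phone really belongs to the owner; the sketch leaves both out.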

Home automation covers the control and automation of lighting, heating, ventilation and air conditioning (HVAC, for example smart thermostats), and security (such as smart locks), as well as home appliances such as washers and dryers, ovens, and refrigerators or freezers. Wi-Fi is often used for remote monitoring and control. Home devices that are remotely monitored and controlled via the Internet are an important constituent of the Internet of Things. Modern systems generally consist of switches and sensors connected to a central hub, sometimes called a “gateway”, from which the system is controlled through a user interface: a wall-mounted terminal, mobile phone software, a tablet computer or a web interface, often but not always via Internet cloud services.
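As a rough picture of that architecture, the following Python sketch models a hub that keeps track of switches and sensor readings and exposes a single status view that any user interface could display. The `Hub` class, its methods and the device names are assumptions invented for this example; commercial hubs expose their own, different interfaces.

```python
from typing import Dict


class Hub:
    """Toy central hub ("gateway") holding switch states and sensor readings."""

    def __init__(self) -> None:
        self.switches: Dict[str, bool] = {}
        self.sensors: Dict[str, float] = {}

    def register_switch(self, name: str, on: bool = False) -> None:
        # Add a controllable device such as a lock, plug or light.
        self.switches[name] = on

    def report_sensor(self, name: str, value: float) -> None:
        # Store the latest reading sent in by a sensor.
        self.sensors[name] = value

    def set_switch(self, name: str, on: bool) -> None:
        # Commands from the app or wall terminal arrive here.
        if name not in self.switches:
            raise KeyError(f"unknown switch: {name}")
        self.switches[name] = on

    def status(self) -> Dict[str, Dict]:
        # One combined snapshot for whichever user interface asks for it.
        return {"switches": dict(self.switches), "sensors": dict(self.sensors)}


if __name__ == "__main__":
    hub = Hub()
    hub.register_switch("front_door_lock")
    hub.report_sensor("living_room_temp_c", 26.5)
    hub.set_switch("front_door_lock", True)  # e.g. locked from a phone
    print(hub.status())
```

Keeping all state in the hub is what lets a wall terminal, a phone app and a web page show the same picture of the house, whether or not a cloud service sits in between.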

While there are many competing vendors, there are very few globally accepted industry standards, and the smart home space is heavily fragmented. Manufacturers often prevent independent implementations by withholding documentation and by litigation.